Every piece of music is made of elements placed in a mix that we hear. In a rock song, for example, you have drums (kick drum and toms, snare, hi-hats, crashes, etc.), lead and rhythm guitars, bass guitar, and vocals, and there might be keyboards (synths and artificial noises) or other string or brass instruments in the mix.

We don't hear all these instruments in one place. The person who mixed the music recorded, EQed, and placed them in a virtual, imaginary box between the speakers, and used tricks so we hear each instrument to the left or right, further away or closer. The point is that soundstage is mainly an illusion: whether we talk about IEM or speaker soundstage, it is mostly a trick of SPL and of the timing of the sound arriving from each speaker. Sound engineers use several methods to create a soundstage:

- Panning: engineers place each element on the left, on the right, or anywhere in between using a digital audio workstation (DAW), by adjusting the level or phase (timing) differences between the left and right speakers.

- Wet and dry sound: more echo (a wetter sound) makes an element seem further away, while less echo (a drier sound) makes it seem closer.

- Frequency balance: another trick for emulating depth comes from how sound travels over distance. High frequencies have short wavelengths and are absorbed by the air more than low frequencies, so they fade faster over distance. When you hear an instrument or a pair of speakers up close, you hear the high frequencies better than you do from far away. Combining a treble cut with a lower volume can therefore make an element (like the snare or vocals) feel deeper, or further back, in the mix.

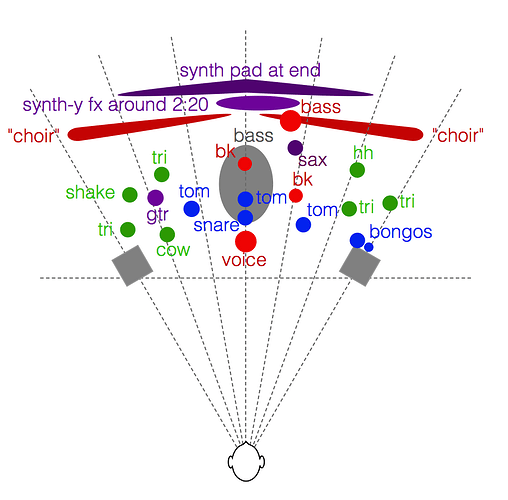

https://youtu.be/cyv5-YLe4Qw?si=MpElBtTS53_GNqrl

This is an example of a soundstage being created using all these different tricks.

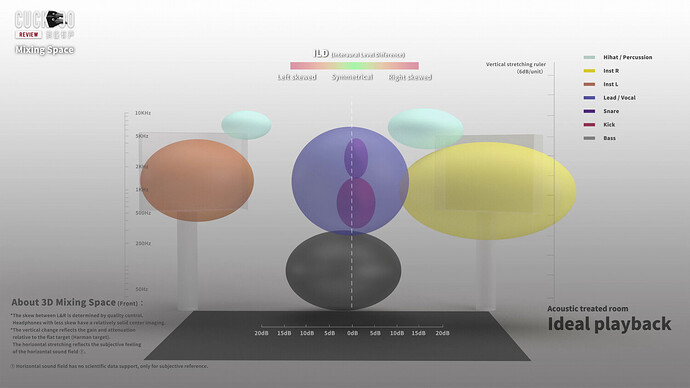
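The three mixing tricks above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular DAW does it: `constant_power_pan` uses a common sine/cosine pan law, while `simple_echo`, `one_pole_lowpass` and `push_back` are hypothetical helpers whose curves (gain, treble cut and wet mix versus distance) are assumptions chosen only to show the idea of combining lower volume, less treble and more echo to push an element back.

```python
import math


def constant_power_pan(sample: float, pan: float) -> tuple[float, float]:
    """Split one mono sample into (left, right) with a sine/cosine pan law.

    pan runs from -1.0 (hard left) through 0.0 (center) to +1.0 (hard right).
    left**2 + right**2 stays equal to sample**2, so the element keeps roughly
    the same loudness as it moves across the stage.
    """
    angle = (pan + 1.0) * math.pi / 4.0  # maps -1..+1 onto 0..pi/2
    return sample * math.cos(angle), sample * math.sin(angle)


def simple_echo(signal: list[float], delay_samples: int, feedback: float) -> list[float]:
    """Feedback echo: a crude stand-in for a reverb send (the 'wet' signal)."""
    out = list(signal)
    for i in range(delay_samples, len(out)):
        out[i] += feedback * out[i - delay_samples]
    return out


def one_pole_lowpass(signal: list[float], cutoff_hz: float, sample_rate: float) -> list[float]:
    """One-pole low-pass filter, standing in for treble lost over distance."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


def push_back(dry: list[float], wet: list[float], distance: float,
              sample_rate: float) -> list[float]:
    """Hypothetical 'distance' knob combining the depth cues above.

    distance >= 1.0 is in arbitrary units, and every curve below is an
    illustrative assumption rather than a standard formula.
    """
    gain = 1.0 / distance                # quieter: about -6 dB per doubling of distance
    cutoff_hz = 16000.0 / distance       # darker: less treble as it moves back
    wet_mix = min(0.9, 0.2 * distance)   # wetter: more echo, less direct sound
    darker = one_pole_lowpass(dry, cutoff_hz, sample_rate)
    return [gain * ((1.0 - wet_mix) * d + wet_mix * w) for d, w in zip(darker, wet)]
```

For example, `constant_power_pan(1.0, -1.0)` returns `(1.0, 0.0)` (hard left), and `push_back(dry, simple_echo(dry, 2400, 0.4), 3.0, 48000.0)` would give a quieter, darker, wetter copy of `dry`.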

So how do you get the soundstage on speakers as close as possible to what the mix engineer intended? You can set the speakers up ideally in a symmetrical room and place absorbers and diffusers (matching the artist's room) in various locations. That gets you closer, but it will never exactly match the way the artist thought about, heard, and made the music, because your room, your tools, and your HRTF all differ from theirs.

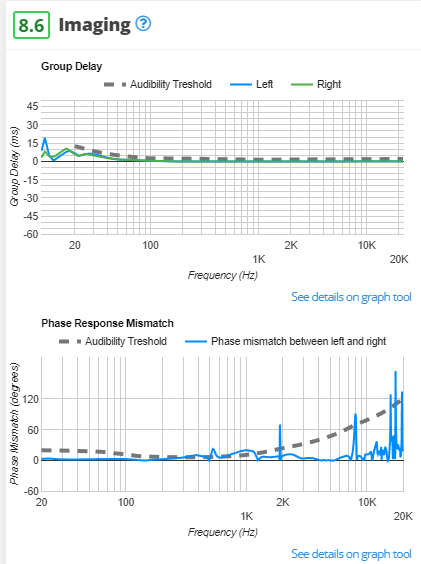

But what about IEMs? How can you get a stage that is as neutral and transparent as possible, or a bigger one? First, some hardware traits are associated with imaging and soundstage: the flatter the phase response, and the smaller the group-delay mismatch between the two earpieces of a pair, the more balanced and transparent the reproduction of soundstage and imaging. Beyond that, it is pretty much down to frequency response.
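To make the phase and group-delay point above a bit more concrete, here is a small sketch. It assumes the mismatch between the two earpieces behaves like a pure time offset (a simplification; real drivers also differ in the shape of their phase curves) and uses a hypothetical 10 µs offset. It shows that a fixed timing mismatch turns into a phase difference that grows with frequency, and that group delay, defined as -dφ/dω, equals the delay itself for a pure delay.

```python
import math


def phase_of_delay_rad(freq_hz: float, delay_s: float) -> float:
    """Phase shift (radians) that a pure time delay adds at one frequency."""
    return -2.0 * math.pi * freq_hz * delay_s


def group_delay_s(phase_fn, freq_hz: float, step_hz: float = 1.0) -> float:
    """Group delay tau_g = -d(phi)/d(omega), estimated with a central difference."""
    dphi = phase_fn(freq_hz + step_hz) - phase_fn(freq_hz - step_hz)
    domega = 2.0 * math.pi * (2.0 * step_hz)
    return -dphi / domega


def phase_mismatch_deg(freq_hz: float, delay_s: float) -> float:
    """Phase difference (degrees) between two earpieces offset by delay_s."""
    return 360.0 * freq_hz * delay_s


if __name__ == "__main__":
    offset = 10e-6  # hypothetical 10 microsecond mismatch between left and right
    for f in (100.0, 1000.0, 10000.0):
        print(f"{f:>7.0f} Hz: {phase_mismatch_deg(f, offset):5.2f} degrees")
    # A pure delay has perfectly linear phase, so its group delay is the delay itself.
    print(group_delay_s(lambda f: phase_of_delay_rad(f, offset), 1000.0))
```

The same offset that is a fraction of a degree at 100 Hz becomes tens of degrees at 10 kHz, which is one way to see why channel matching matters more for imaging in the upper frequencies.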

IEMs give you no real sound depth, so music appears inside your skull, whereas with a two-channel speaker setup the stage appears in the 180-degree space in front of you.

But there are similarities to speakers here, and I can't say which one really feels better to the ear. With IEMs you usually hear the kick drum in the middle of your skull, while with speakers it sits in the middle of the mix, near and close to you. You hear the vocals or the snare in the middle too, maybe a millimeter or two further back than the bass, whereas with speakers they can seem close or meters away from you (though the central placement is similar). With speakers you hear the guitars or supporting instruments at the sides, but with IEMs you hear them at the left and right, or far, far to the left and right, of your skull (sometimes so far to the sides that you might perceive them as out-of-head sound).

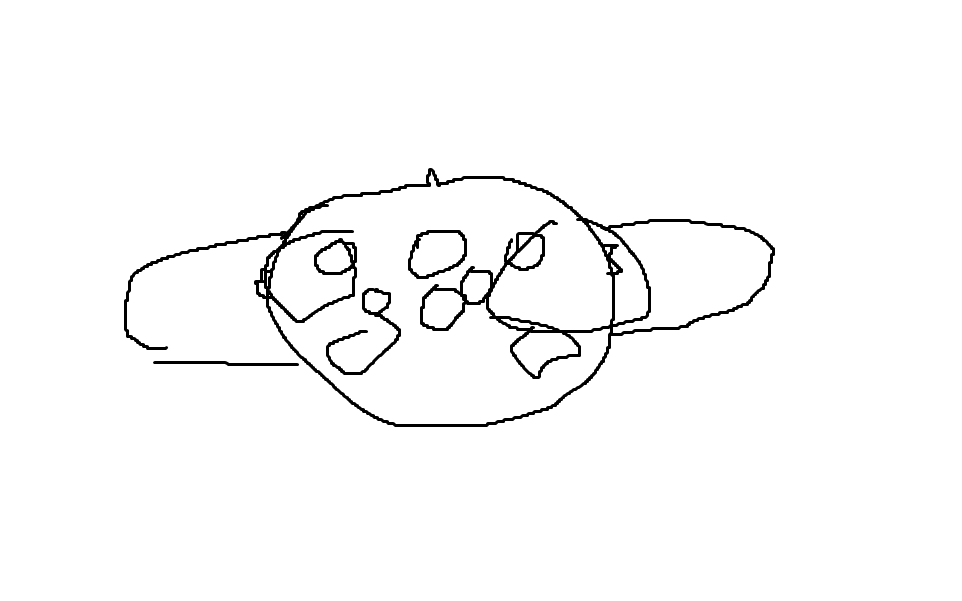

With speakers, things appear in front of you, but with IEMs they happen in your ears, with roughly the same placements.

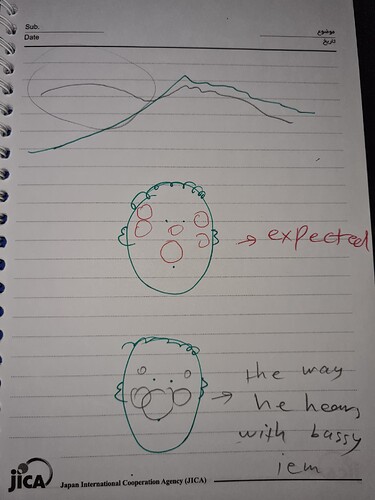

Speakers

vs. earphones (top view of a human); the circles are the different elements placed in the mix.

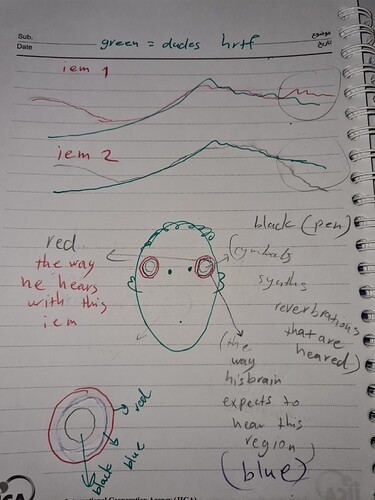

When an IEM playing music inside the ears has lower SPL in some region than the listener's HRTF expects, the listener hears that region as quieter and more subdued. Here I drew a green dude; he looks traumatized because I have been using him as a test subject in my basement all day long. I drew two IEMs whose responses are similar up to 10 kHz: one is brighter than his HRTF (red) and the other is darker than his HRTF (black pen). He listens to both, and what happens?

The picture below shows his stereo imaging in the upper frequencies (the circles I drew and magnified at the bottom are the treble area). The red IEM, with more treble, creates a bigger space in this high-frequency area than his HRTF expects, so he hears the high-frequency instruments louder than the other frequencies. One listener might associate that with closeness; another might associate it with airiness and realism and call it "a bigger sound"; another might hear the treble as louder and, since treble usually sits at the far sides, conclude that the overall stage is big when its SPL is high. Soundstage is an illusion, after all, and what it means differs from person to person.

Or here: the guy expects a certain level of low-frequency space and SPL in his head (red pen circles), but this is how he hears the low frequencies with an IEM that is tilted very dark compared to his green HRTF (the lower head, drawn in pencil, shows how he hears the IEM). Notice that the low-frequency circles are bigger, meaning they take more space in his head, so he hears bass instruments much louder than the treble and the midrange.

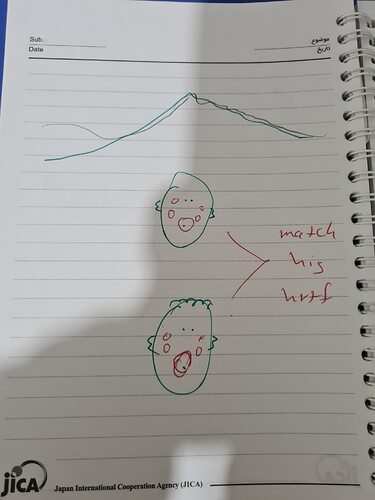

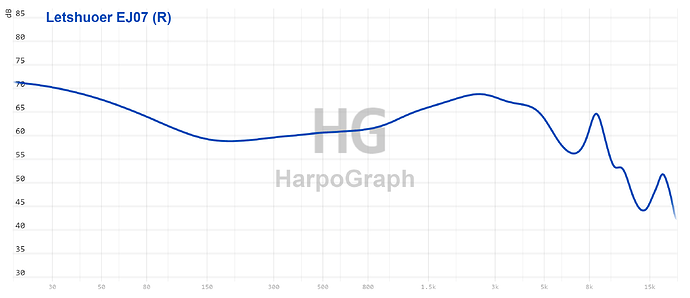
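The comparison in the drawings can be written as a band-by-band subtraction. In this sketch the curves, the 500 Hz reference point and the ±1 dB tolerance are all hypothetical values; the idea is simply that after level-matching, a band where the IEM sits above your HRTF target should sound louder (take more space in the head) and a band below it should sound quieter. The same logic covers the dark-tilted IEM, where the bass bands would come out positive.

```python
def describe_stage(iem_db: dict[int, float], target_db: dict[int, float],
                   ref_hz: int = 500,
                   tolerance_db: float = 1.0) -> dict[int, tuple[float, str]]:
    """Compare an IEM's response with a personal target, band by band.

    Both curves are normalized at ref_hz first, which mirrors listening at a
    matched volume. A positive delta means the IEM is louder than the target
    in that band. tolerance_db is an arbitrary 'close enough' window.
    """
    iem_ref, target_ref = iem_db[ref_hz], target_db[ref_hz]
    report = {}
    for freq in sorted(target_db):
        delta = (iem_db[freq] - iem_ref) - (target_db[freq] - target_ref)
        if delta > tolerance_db:
            label = "louder, takes more space"
        elif delta < -tolerance_db:
            label = "quieter, takes less space"
        else:
            label = "matched"
        report[freq] = (round(delta, 1), label)
    return report


# Hypothetical curves in dB, similar up to 10 kHz as in the drawing.
target = {100: 4.0, 500: 0.0, 3000: 10.0, 10000: 3.0}
bright_iem = {100: 4.0, 500: 0.0, 3000: 10.0, 10000: 8.0}  # the "red" IEM
dark_iem = {100: 4.0, 500: 0.0, 3000: 10.0, 10000: -2.0}   # the "black pen" IEM

for name, iem in (("bright", bright_iem), ("dark", dark_iem)):
    print(name, describe_stage(iem, target))
```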

In the last picture, the IEM matches his HRTF well (except for a subbass boost to emulate that speaker feel), and he hears the instruments well placed in the space inside his head. No area is louder than the others, and no odd boosts or cuts make some areas sound quieter or louder. If you manage to achieve this, with EQ or with any IEM whose stock tuning is close to your HRTF, the stage appears harmonious and therefore more "holographic", or more 3D, to you, since an IEM stage really is 3D around your head, unlike speakers, whose stage sits deep in front of you. To my ears, a rising DF (diffuse-field) or Harman 2017-like midrange helps immensely in creating a balanced, holographic in-head stage. (Maybe that is why many think the EJ07 has a 3D stage: notice the midrange rise in its FR, similar to all HRTFs. This is just a personal guess, of course.)
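If you try to pull an IEM toward your own HRTF with EQ, most parametric EQ tools offer peaking filters. One widely used formulation is the peaking biquad from Robert Bristow-Johnson's Audio EQ Cookbook, sketched below; whether a given EQ app uses exactly these formulas is an assumption, and the 5 dB, 10 kHz, Q = 2 values are hypothetical. `response_db` evaluates the filter on the unit circle so you can check that it adds the requested gain at its center frequency and leaves distant frequencies alone.

```python
import cmath
import math


def peaking_eq(f0_hz: float, gain_db: float, q: float, fs_hz: float):
    """Peaking-EQ biquad from the RBJ Audio EQ Cookbook.

    Returns (b, a) coefficient lists normalized so that a[0] == 1.
    """
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b = [1.0 + alpha * amp, -2.0 * cos_w0, 1.0 - alpha * amp]
    a = [1.0 + alpha / amp, -2.0 * cos_w0, 1.0 - alpha / amp]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def response_db(b: list[float], a: list[float], f_hz: float, fs_hz: float) -> float:
    """Magnitude response of the biquad at one frequency, in dB."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20.0 * math.log10(abs(num / den))


# Hypothetical example: the IEM sits 5 dB under your target around 10 kHz.
b, a = peaking_eq(10000.0, 5.0, 2.0, 48000.0)
print(round(response_db(b, a, 10000.0, 48000.0), 2))  # 5.0
```

A peaking filter only touches the region around its center frequency, which fits the goal of correcting individual regions relative to your HRTF without shifting the rest of the stage.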

So boosting or cutting a region makes that region louder or quieter. When the ear and brain expect a certain midrange SPL and you don't provide it, the brain hears the midrange as quieter, and quieter means smaller in the head. One person might associate that lack of intimacy with a bigger space; another might associate the quietness with distance. The same applies to other frequencies, such as treble. Imagine the brain expects a 13 dB treble boost over 500 Hz but you provide only 7 dB: the result is a quieter, and therefore smaller, space in the head around the upper range of instruments and reverberations. One person might associate that with realism and hence a bigger stage; another might associate it with intimacy and hence a smaller stage.
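The 13 dB versus 7 dB example works out as follows. The sound-pressure ratio follows directly from the definition of the decibel; the loudness figure uses the common rule of thumb that perceived loudness roughly doubles for every 10 dB, which is only an approximation and varies with level and frequency.

$$\Delta L = 13\ \text{dB} - 7\ \text{dB} = 6\ \text{dB}$$

$$\frac{p_{\text{provided}}}{p_{\text{expected}}} = 10^{-\Delta L/20} = 10^{-6/20} \approx 0.50$$

$$\frac{N_{\text{provided}}}{N_{\text{expected}}} \approx 2^{-\Delta L/10} = 2^{-0.6} \approx 0.66$$

So the treble arrives with about half the expected sound pressure and, by that rule of thumb, sounds roughly two-thirds as loud as the brain expects.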

Knowing this helps us create soundstage illusions in IEMs, using EQ or different frequency responses relative to our own HRTF.

- Because of HRTF differences, we each hear frequencies slightly differently. I hear the bass, mids, and treble of song X played on a flat speaker slightly differently than you do, so my ideal soundstage differs from yours. The soundstage an IEM recreates in your head is therefore mainly personal and depends on your own HRTF, beyond the hardware quality of the IEM (most IEMs have passable hardware anyway; if you check the imaging section of IEM reviews on the RTINGS site, you can see that most IEMs have fine hardware). Listening to other reviewers, with their own HRTFs and their own mental image of soundstage, describe an IEM's "soundstage" is like pouring water into a blender and blending it, hoping something will happen.

- With IEMs, we can boost a region relative to our HRTF to raise its SPL, so it appears bigger in our head.

- We can cut a region relative to our HRTF to make it quieter, so it takes up less space in the head.

- Intact, excessive, or missing upper harmonics affect the sense of realism and distance. Too much high-frequency content makes the sound intimate or loud (harsh); the right amount for your HRTF makes the sound balanced; and less treble than your HRTF expects makes cymbals, shakers, and reverbs quieter, and therefore smaller in your head.

- Reverbs and echoes can massively trick the brain's sense of stage.

- Since boosting a region makes it take up more space in the head, we can predict what happens with busy content: when a song has a lot of low-frequency content, boosting the lows boosts many low-frequency instruments playing at the same time, which gives a stuffed or muddy sound. Likewise, boosting the treble when many high-frequency instruments are playing at once makes them sound stretched, overly intimate, and clashing.

- Our brain generally associates quieter with further away, and darker with further away, so when people say something sounds open, they may mean it lacks a high-frequency or midrange boost compared to their HRTF.
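The point about busy low-frequency content can be illustrated by looking at how a boost changes each band's share of the total energy. This is a crude proxy rather than a model of perception, and the track's band levels are made-up numbers: levels are converted to linear power so they can be summed, and a +6 dB bass boost is applied to an already bass-heavy track.

```python
def energy_share(band_levels_db: dict[str, float],
                 eq_gain_db: dict[str, float]) -> dict[str, float]:
    """Fraction of total energy in each band after applying EQ gains.

    Levels are converted from dB to linear power (10 ** (dB / 10)) so that
    bands can be summed; bands missing from eq_gain_db get 0 dB of EQ.
    """
    powers = {band: 10.0 ** ((level + eq_gain_db.get(band, 0.0)) / 10.0)
              for band, level in band_levels_db.items()}
    total = sum(powers.values())
    return {band: p / total for band, p in powers.items()}


# Hypothetical bass-heavy track: band levels in dB relative to the bass band.
track = {"bass": 0.0, "mids": -3.0, "treble": -9.0}
before = energy_share(track, {})
after = energy_share(track, {"bass": 6.0})
print(f"bass share before: {before['bass']:.0%}, after +6 dB: {after['bass']:.0%}")
```

With these numbers the bass band goes from about 61% of the energy to about 86%, which is one way to picture the low end crowding out everything else.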

If you know more, add to this thread below. Thank you.