Everyone here has heard these, and some of you may even be keen believers in them. Ranging from the “magical coldness” supposedly added to the sound (compared to full analog), to “stair-step audio”, and by extension to “hi-res” audio being better since “it has more resolution so it has to be better”, digital audio has been plagued with myths since its dawn in the last century.

Many people imagine audio like an image, which is made of pixels. The more pixels it has, the better it looks. So, it seems natural that “resolution” in digital audio works the same, right? Wrong.

To start with the conclusion, standard lossless CD quality (16/44.1, whether stored as FLAC or WAV) is already more than any human is able to hear. It is a PERFECT representation of the original recording, band-limited to 22 kHz, since a band-limited sound wave doesn’t need an infinite number of points to be perfectly represented.

This stuff has been known since about 1950, so why is it still not common knowledge these days?

This video is the most comprehensive and trustworthy single piece of information about digital audio that I know of. Its presenter will thoroughly educate you on the way digital audio works. Anyone who wants to “know” rather than “believe” their very placebo-prone ears will want to watch it.

To summarize a few key points:

- Standard CD quality of 16/44.1 is a perfect representation of any recorded audio, band-limited to 22 kHz. I am in my twenties and I can hear up to 17 kHz at most. I don’t go to concerts and don’t listen to music loudly, so other people my age may hear even less. This means CD quality is already more than enough for pretty much anyone who is old enough to actually enjoy music.

- “Hi-res” just raises that limit to 44 kHz or 88 kHz, THAT’S IT. Everything below 22 kHz is identical to standard CD lossless.

- No lossless format is more lossless than another (duh). FLAC, WAV etc. differ only in the amount of file size they waste.

- Audio is not an image; you don’t hear more stuff if it has more resolution. You only need 44.1k samples per second to perfectly represent audio up to 22 kHz.

- The purpose of bit depth (16, 24, 32) is to reduce the quantization error from conversion (the resulting noise floor). 16 bits already puts the noise floor about 96 dB below full scale, which means you need to listen at 96 dB or louder before you could hear any of the noise floor. Needless to say, your hearing is in immediate danger at that level, so 16 bits is all the bit depth anyone sane needs.
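As a rough sanity check on the 96 dB figure, here is a minimal Python sketch. The function names are my own, and the measurement uses plain rounding without dither, which is a simplification of what real converters do. It computes the ratio between full scale and one quantization step, then actually quantizes a full-scale 997 Hz sine and measures how far below the signal the error sits.

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Ratio between full scale and a single quantization step, in dB."""
    return 20 * math.log10(2 ** bits)

def measured_snr_db(bits: int, fs: int = 44_100, freq: float = 997.0) -> float:
    """Quantize one second of a full-scale sine and measure signal-to-error ratio."""
    scale = 2 ** (bits - 1) - 1           # largest positive integer sample value
    signal_power = error_power = 0.0
    for n in range(fs):
        x = math.sin(2 * math.pi * freq * n / fs)
        q = round(x * scale) / scale      # plain rounding, no dither (a simplification)
        signal_power += x * x
        error_power += (x - q) ** 2
    return 10 * math.log10(signal_power / error_power)

for bits in (16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB range, "
          f"{measured_snr_db(bits):.1f} dB measured SNR")
```

The first number comes out at 96.3 dB for 16 bits and 144.5 dB for 24 bits. The measured value for a full-scale sine should land a little higher, close to the textbook 6.02 × bits + 1.76 dB.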

Practical test - the actual waveform difference from hi-res to standard CD, made with Audacity

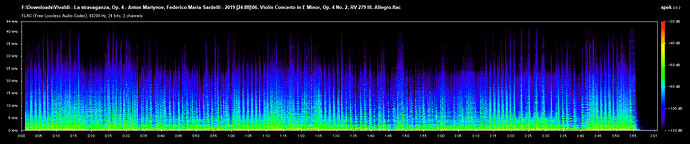

Hi-res 24/88.2 - 4x file size

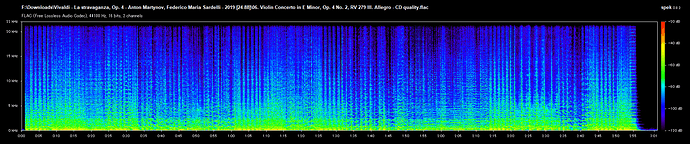

16/44.1

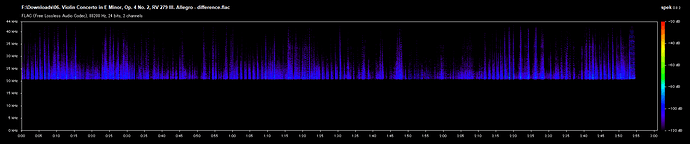

The sample by sample difference between the two

Hear for yourself: this is the difference file. It’s the real deal, and you can check the spectrum for yourself. It has audio info, but nothing audible. Maybe your dog will be able to enjoy it.

https://drive.google.com/open?id=1G_cRuXoOCcCHt6N48ghtZdTUQxBhvakX

Even if it were in the audible frequency range, the difference is so faint that it is almost non-existent. And this was with an actual hi-res file. The usual case is that “hi-res” files have absolutely no information above 22 kHz (they are upscales), which makes them a total scam and a waste of space.

The main point I am trying to prove here is that CD (16/44.1) is already perfect quality in the audible spectrum. As opposed to what analogue heads proclaim, having a finite number of points as samples doesn’t make the audio imperfect. Sound waves aren’t drawings. They can be represented with mathematical functions.
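To make the “mathematical functions” part concrete, here is a small Python sketch of Whittaker-Shannon (sinc) interpolation. It is an illustration under my own assumptions, not what any particular DAC does: a 20 kHz sine is stored at 44.1 kHz (barely more than two samples per cycle), then the waveform is rebuilt at instants that fall between the stored samples and compared with the true sine. Only a finite window of 4000 samples is used, so the match is approximate rather than exact.

```python
import math

FS = 44_100   # CD sample rate in Hz
F = 20_000    # test tone in Hz, just below the 22.05 kHz limit
N = 4_000     # samples kept; a finite window, so the result is approximate

samples = [math.sin(2 * math.pi * F * n / FS) for n in range(N)]

def sinc(u: float) -> float:
    """Normalized sinc: sin(pi*u) / (pi*u), with the limit value 1 at u = 0."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def reconstruct(t: float) -> float:
    """Whittaker-Shannon interpolation: waveform value at time t (seconds)."""
    pos = t * FS
    return sum(x * sinc(pos - n) for n, x in enumerate(samples))

# Instants in the middle of the window, ten per sample period,
# so most of them fall between the stored samples.
times = [(N / 2 + k / 10) / FS for k in range(50)]
max_error = max(abs(reconstruct(t) - math.sin(2 * math.pi * F * t)) for t in times)
print(f"largest deviation from the true sine: {max_error:.2e}")
```

The deviation should be tiny compared with the signal's amplitude of 1, and it comes only from cutting the sum off at a finite number of samples: the wider the window, the closer it gets to zero. There are no stair-steps anywhere in the rebuilt waveform.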

And as you can see, doubling the number of samples does not affect the common area between the two at all. The only things hi-res is capable of doing are reducing the noise floor (which is already stupidly low) and increasing the frequency range. These formats were originally intended for studio use, but they made their way into the hands of consumers due to greedy marketing.
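The same kind of null test can be sketched in Python on a synthetic signal. Everything here is an assumption for illustration: the “hi-res” signal is a made-up 1 kHz tone plus a faint 30 kHz tone at 88.2 kHz, and the CD-band version is produced by an ideal low-pass (zeroing DFT bins above 22.05 kHz) standing in for Audacity's resampler. Bit-depth reduction is left out, so a real test will also show a small quantization residue.

```python
import cmath
import math

FS_HI = 88_200      # hi-res sample rate in Hz
N = 441             # 5 ms of audio, so DFT bins are 200 Hz apart
CD_LIMIT = 22_050   # highest frequency a 44.1 kHz file can hold

# Hypothetical hi-res signal: audible 1 kHz tone plus a faint 30 kHz tone.
hires = [math.sin(2 * math.pi * 1_000 * n / FS_HI)
         + 0.05 * math.sin(2 * math.pi * 30_000 * n / FS_HI) for n in range(N)]

TWIDDLE = [cmath.exp(-2j * math.pi * m / N) for m in range(N)]

def dft(x, inverse=False):
    """Plain O(N^2) discrete Fourier transform (fine for a few hundred samples)."""
    sign = -1 if inverse else 1
    out = [sum(v * TWIDDLE[(sign * k * n) % N] for n, v in enumerate(x))
           for k in range(N)]
    return [v / N for v in out] if inverse else out

def bin_freq(k):
    """Frequency in Hz of bin k, folding the upper half onto negative frequencies."""
    return min(k, N - k) * FS_HI / N

# Idealized CD-band version: keep only the content at or below 22.05 kHz.
spectrum = dft(hires)
kept = [c if bin_freq(k) <= CD_LIMIT else 0 for k, c in enumerate(spectrum)]
cd_band = [v.real for v in dft(kept, inverse=True)]

# Null test: subtract sample by sample, then see where the leftover energy lives.
difference = [a - b for a, b in zip(hires, cd_band)]
diff_spectrum = dft(difference)
audible = sum(abs(c) ** 2 for k, c in enumerate(diff_spectrum)
              if bin_freq(k) <= CD_LIMIT)
ultrasonic = sum(abs(c) ** 2 for k, c in enumerate(diff_spectrum)
                 if bin_freq(k) > CD_LIMIT)
print(f"difference energy below 22.05 kHz: {audible:.3e}")
print(f"difference energy above 22.05 kHz: {ultrasonic:.3e}")
```

In this idealized setup the audible part of the difference is zero up to floating-point rounding, and all that remains is the 30 kHz tone. A real resampler has a non-ideal filter, so expect a small residue near 22 kHz there.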