Niceee! I like the build, what case is that? Is it the Lian Li Lancool with the doors and stuff?

And how’s the card itself?

Card is great! Nice and quiet. It’s a Phanteks P400 with a mesh front panel. I wanna get a Lancool 2 right now though.

Some good news right now: slow start to the year, but at least it is getting a bit better for me. My 3080 is getting replaced. Instead of the same model my family gave me back then, they will provide a different version of the 3080. I compared the two cards, and it turns out the replacement has a higher boost clock: the one I own boosts to 1755 MHz, while the replacement boosts to 1785 MHz.

I just hope this one will not break after a couple of days, because I want it to last. If it does, well, I guess 2020 is not over for me yet.

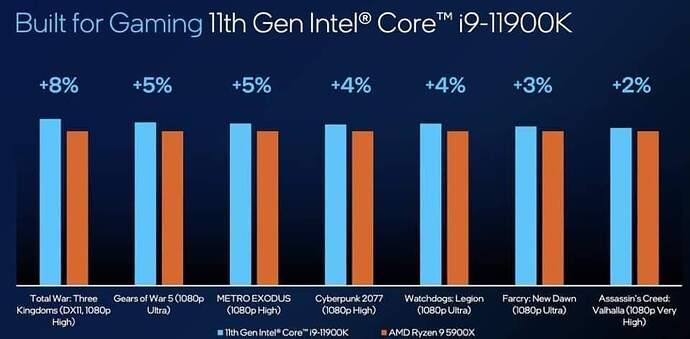

Seeing these benchmarks by Intel makes me wonder: why the fuck does 1080p performance matter for people who can afford this class of CPU? Like, these guys are running 1440p or 4K for the most part.

It doesn’t matter for those people, it’s rather a benchmark of how CPU performance affects frame rate, as the GPU isn’t taxed, so it more clearly shows the effect of the CPU.

I get that. It just seems rather useless when, for gaming at this price bracket, these figures literally don’t matter.

CPU performance doesn’t matter?

Well it does, but the number of people buying an 11900K and a 3080 or 3090 to game at 1080p… That’s a pretty niche market. If you’re playing at 4K it’s not a 5% difference anymore, maybe 1%.

well, by default if you’re getting a top end CPU, you already know it’s going to be a performer…but in order to see how they perform for gaming, you need to run the lower resolution in games to see how the CPU performs. it’s a tired metric, I agree, but a necessary one if you want to know how well the processor you chose performs for gaming.

Because at 4k, all 4-core >3.5GHz CPUs perform within measurement error.

Well what would be more logical (from a non-marketing perspective) is to show the results at 1440p and 4k, showing you’re not worse than anyone else, and crushing it in stuff where you can actually make an impact, for instance Machine Learning, video editing and 3D stuff.

However, that would mean you have one less fancy bar chart to show, one which is extra nice because I don’t believe a lot of people grasp the concept of reading the small print on a chart and extrapolating that to a real-world scenario, and thus think that this large 8% will be noticeable on their 4K screen.

I very much don’t think it’s necessary, however I get why they do it and I’m having a hard time blaming them because it’s just too effective.

Except Intel got that crown stolen from them with 2nd Gen Ryzen

Not really, because at 1440p and 4K the GPU takes over and does the lion’s share of the processing.

That’s really the sad part. It’s misleading while being technically honest. If they wanted to compel people to actually buy their processor at this price point, I’m pretty sure Blender and Cinebench benchmarks would be more compelling, as even those 1-5% differences sometimes matter in a workstation scenario. Unless, of course, Intel didn’t beat AMD in that respect this generation and is trying to win the gamers back with 1080p benchmarks.

my goodness guys. 1080p is the resolution you have to run gaming benchmarks at to test how good a CPU is at gaming. anything higher and the processing load shifts to the GPU. how many times does this need to be said for you to grasp why CPU reviews benchmark games at the lower resolution?

there are other metrics a CPU can be tested against…but those don’t indicate gaming performance, they indicate how good it is at that workstation task. sheesh…

We get it, the point is that it’s a meaningless metric since no one is (or at least should be) using this hardware at 1080p. I get that this is the only metric to measure “gaming performance” but that does not mean it’s a useful metric.

Everyone here knows what’s happening, everyone here understands the concepts, but not everyone here agrees with it. Right now Intel can claim they have “Up to 8% better gaming than AMD” even though 99.9% of the people buying the hardware will not get 8% better gaming performance than the equivalent AMD processor, because they are not playing at 1080p! Instead they get maybe 0-2% better gaming performance, because they did not spend $2000 on a PC to play at 1080p.

Techspot, for instance, didn’t test the RTX 3080 at 1080p because nobody buys a 3080 to play at 1080p, making it a useless metric.

your comment shows you don’t get it Martward.

the 1080p metric is needed to see CPU gaming performance. at any higher resolution the CPU takes a back step and lets the GPU take over.

so benchmarks at 1440p and higher don’t show how good a CPU is, they show how good the GPU is.

mein gott…

Matward, if you think it’s a meaningless metric, then how exactly do you propose that any reputable reviewer go about testing for gaming performance differences among CPUs? That is what this metric is used for. It is not necessarily applicable to a real world situation a user will be running at. It is a tool for comparing CPU X’s performance against that of CPU Y for the workload of a particular game. In order to do that you have to take the GPU out of the picture to the maximum extent you can and the way you go about doing that is to give it the “lightweight” workload of 1080p resolution so that it’s asking the CPU for data as fast as possible.
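For anyone who wants to see the argument in numbers, here is a rough sketch of the usual "slowest stage caps the frame rate" model. It is a simplification, and every figure in it is made up for illustration (the two CPU frame rates and the per-resolution GPU caps are hypothetical, not measurements of any real part). It only shows why a gap that is visible at 1080p can shrink or vanish once the GPU becomes the limit.

```python
def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Simplified bottleneck model: the slower of the two stages sets the frame rate."""
    return min(cpu_fps, gpu_fps)


def cpu_gap_percent(cpu_a_fps: float, cpu_b_fps: float, gpu_fps: float) -> float:
    """Percentage by which CPU A beats CPU B once the GPU cap is applied."""
    a = delivered_fps(cpu_a_fps, gpu_fps)
    b = delivered_fps(cpu_b_fps, gpu_fps)
    return (a - b) * 100.0 / b


# Hypothetical numbers, chosen only to illustrate the effect.
CPU_A_FPS = 216.0  # frames per second CPU A could feed an infinitely fast GPU
CPU_B_FPS = 200.0  # same for CPU B
GPU_FPS = {"1080p": 300.0, "1440p": 210.0, "4K": 110.0}  # assumed GPU caps

gaps = {res: cpu_gap_percent(CPU_A_FPS, CPU_B_FPS, cap) for res, cap in GPU_FPS.items()}

for res, gap in gaps.items():
    print(f"{res}: CPU A ahead by {gap:.1f}%")
```

With these assumed inputs the gap is 8% at 1080p, 5% at 1440p and 0% at 4K, which is both sides of this thread in one table: the low resolution is what exposes the CPU difference, and the high resolution is where most buyers of this hardware will actually play.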

You seem to confuse not agreeing with you with not understanding.

True, but a reputable reviewer will mention that this is an unrealistic scenario and that you shouldn’t expect these differences at realistic resolutions. If I were a food reviewer specialized in reviewing donuts, I could make a metric where I measure how easily they slide over my privates; it wouldn’t be particularly useful to anyone, but it’s definitely a way to distinguish between donuts. (This is an exaggerated joke comparison, just to show that not every comparison is useful.)

Look, I’m not saying anyone should lose their heads or their jobs over this. Personally it kind of bugs me that this chart is used to claim an X% of “better gaming performance” and a lot of people don’t understand how this is tested. It’s fine, I understand why they do it from a technical perspective as well as a marketing perspective.

You are both completely right, I’m sorry that it annoys me.